Google has launched its much-anticipated Gemini model, entering the fierce battlefield of artificial intelligence against the reigning champion, GPT-4. The great AI war of 2023 has taken an unexpected twist, leaving tech enthusiasts on the edge of their seats.

Gemini, available in three sizes (Nano, Pro, and Ultra), sets itself apart with its multimodal capabilities, transcending the boundaries of traditional language models. Unlike its predecessors LaMDA and PaLM 2, Gemini goes beyond mere text training, incorporating sound, images, and video into its learning arsenal.

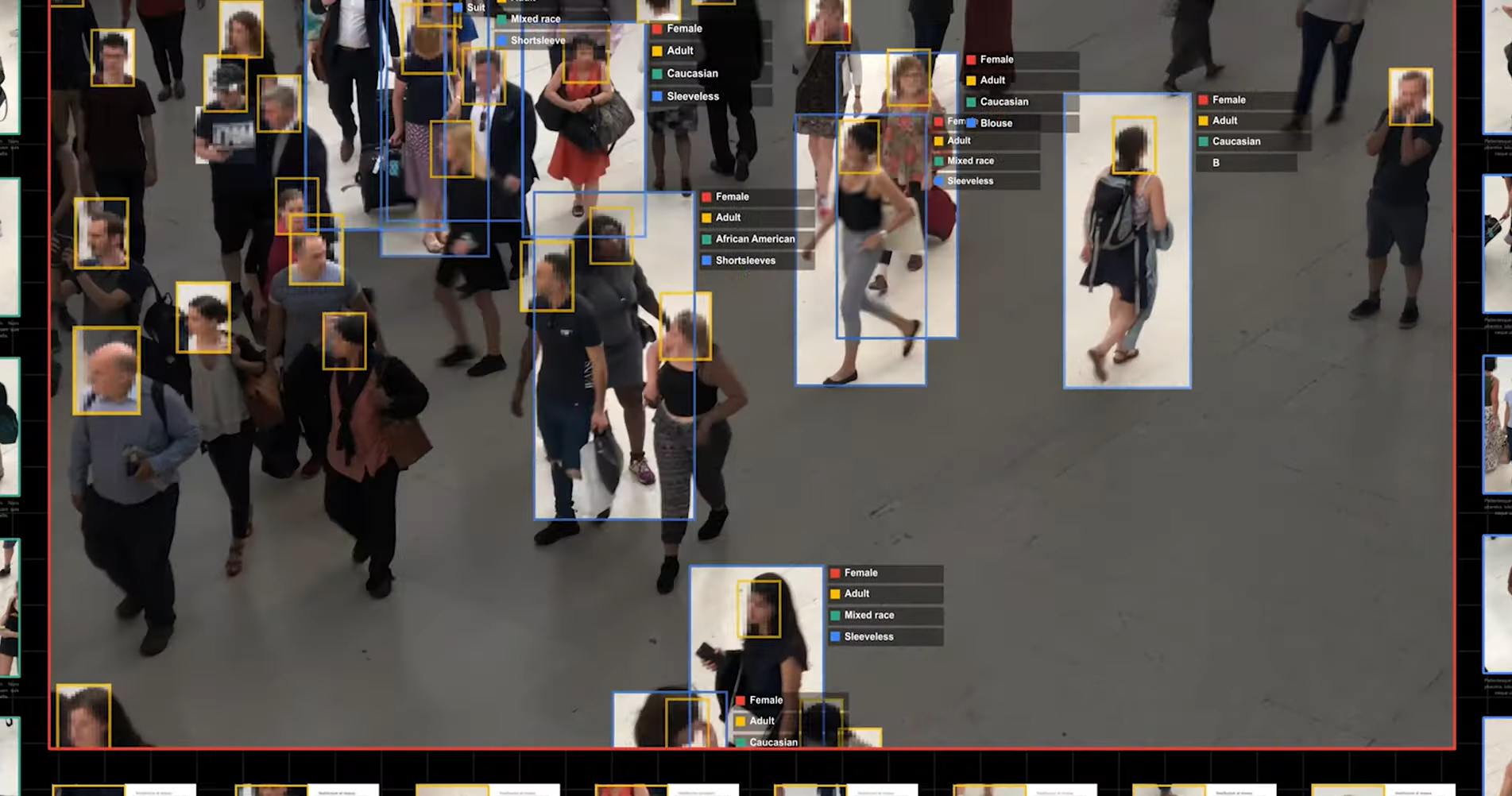

Gemini real-time video tracking

A standout demo from Google showcases Gemini’s video understanding. From recognizing objects in a video feed to tracking the movement of items, Gemini’s capabilities seem almost magical. It even excels in connect-the-dots challenges, proving its versatility in diverse tasks.

Gemini doesn’t stop at visual and auditory tasks; it flexes its muscles in logic and spatial reasoning. From predicting the speed of cars based on aerodynamics to generating blueprints for civil engineering projects, Gemini showcases its potential to revolutionize various industries.

Notably, Google also introduced AlphaCode 2, a code-generation system that Google estimates performs better than 85% of competitive programmers. This development signals a paradigm shift, hinting at potential changes in the landscape of programming competitions.

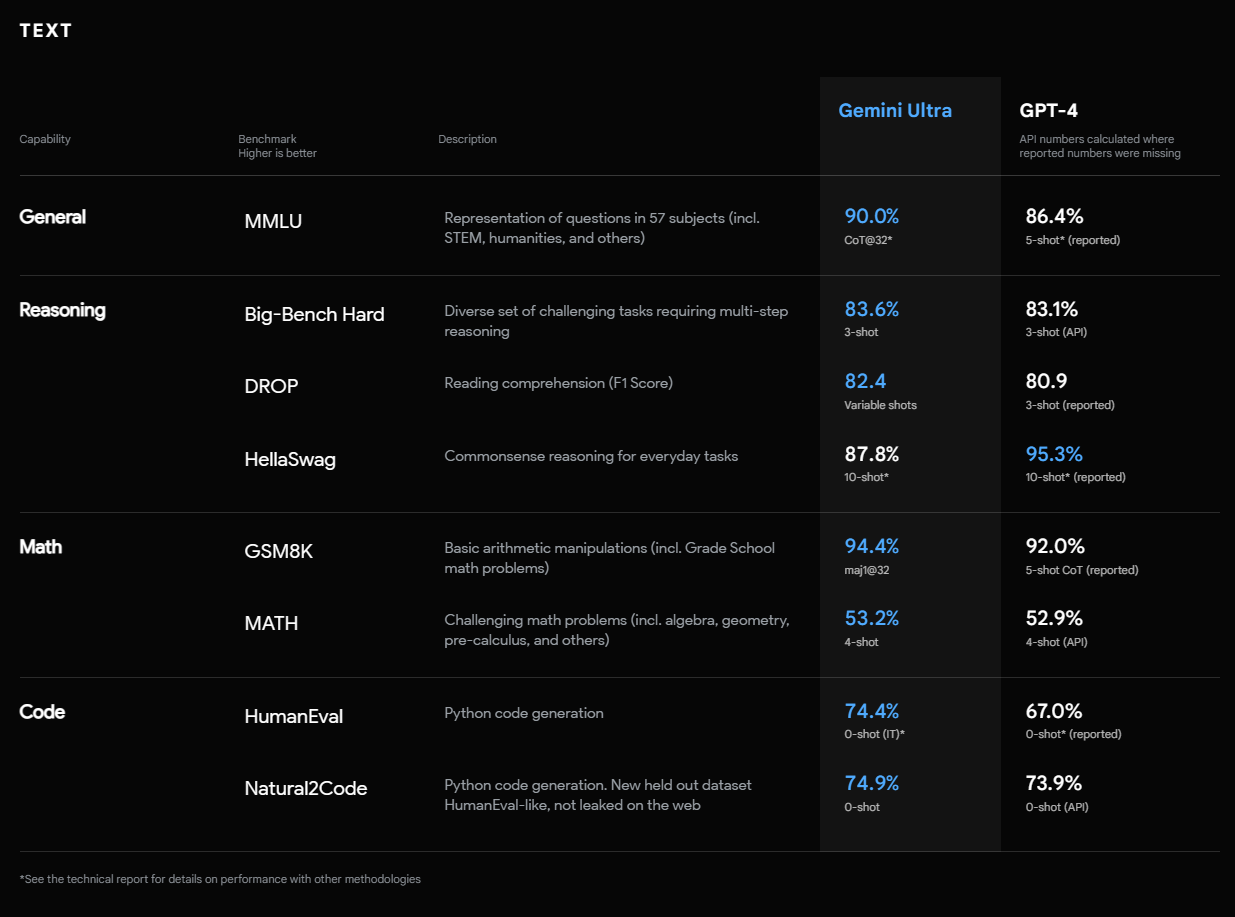

While Gemini Pro shows promise, Gemini Ultra steals the spotlight, outperforming GPT-4 on several benchmarks. According to Google, Gemini Ultra is the first model to surpass human experts on Massive Multitask Language Understanding (MMLU), a milestone achievement in the AI realm.

Gemini Ultra vs. GPT-4 Benchmark

However, the devil is in the details. Gemini Ultra lags behind GPT-4 on the HellaSwag benchmark, which evaluates common-sense natural language understanding. This unexpected performance difference raises intriguing questions about the models’ nuanced capabilities.
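To make the benchmark comparison concrete, here is a minimal sketch of how a multiple-choice benchmark score (the style used by HellaSwag and MMLU) is typically computed: the fraction of questions where the model picks the correct option. The function name, the answer key, and both sets of model answers are hypothetical toy values chosen for illustration, not real benchmark data or results from either model.

```python
def multiple_choice_accuracy(predictions, answers):
    """Return the fraction of questions where the predicted option matches the key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("need at least one question")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Hypothetical toy data: four questions with options A-D (not real benchmark items).
answer_key = ["B", "A", "D", "C"]
model_a_choices = ["B", "A", "D", "A"]  # 3 of 4 correct
model_b_choices = ["B", "C", "A", "C"]  # 2 of 4 correct

score_a = multiple_choice_accuracy(model_a_choices, answer_key)
score_b = multiple_choice_accuracy(model_b_choices, answer_key)
print(f"model A: {score_a:.0%}, model B: {score_b:.0%}")
```

Because each benchmark uses its own question set, a model can lead on one score and trail on another, which is exactly the pattern described above.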

Google trained Gemini on its tensor processing units (TPU v4 and v5e). The TPU v4 chips are deployed in SuperPods, each consisting of 4,096 chips. These pods, connected through dedicated optical switches, enable efficient data transfer during parallel training. The scale of Gemini Ultra necessitates communication across multiple data centers, highlighting the colossal infrastructure supporting its development.
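Why does data transfer matter so much during parallel training? In the common data-parallel setup, every worker computes gradients on its own slice of the data, and those gradients must be averaged across all workers before each weight update. The toy sketch below illustrates that idea in plain Python. It is not Google's training code, and the function names, gradient values, and learning rate are all made up for illustration.

```python
def all_reduce_mean(per_worker_grads):
    """Average gradients element-wise across workers (the result an all-reduce produces)."""
    n_workers = len(per_worker_grads)
    return [sum(values) / n_workers for values in zip(*per_worker_grads)]


def sgd_step(weights, grads, lr):
    """Apply one plain gradient-descent update using the averaged gradients."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Hypothetical gradients from two workers for a model with two parameters.
worker_grads = [
    [1.0, 2.0],  # worker 0
    [3.0, 4.0],  # worker 1
]

avg_grads = all_reduce_mean(worker_grads)
new_weights = sgd_step([0.0, 0.0], avg_grads, lr=0.5)
print(avg_grads, new_weights)
```

With thousands of chips, this averaging step happens on every update, so the speed of the links between chips directly limits how fast training can go.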

The training dataset encompasses a vast array of internet content, including web pages, YouTube videos, scientific papers, and books. Google applies reinforcement learning from human feedback (RLHF) to fine-tune the model’s quality, ensuring it meets rigorous standards.

While Gemini Nano and Pro models hit Google Cloud on December 13th, Gemini Ultra is set for release in the coming year. Google says additional safety testing must be completed before its public debut.

As the AI war intensifies, Gemini’s entry into the arena adds a new layer of complexity. Will it dethrone GPT-4, or is this merely the beginning of a more profound AI revolution? Stay tuned for further developments in the ever-evolving landscape of artificial intelligence.

Fireship.io. (December 8, 2023). “Gemini: Unveiling Google’s Multimodal AI” [Video]. Retrieved from https://www.youtube.com/watch?v=q5qAVmXSecQ

Google. (December 6, 2023). “Hands-on with Gemini: Interacting with Multimodal AI” [Video]. Retrieved from https://www.youtube.com/watch?v=UIZAiXYceBI